Percona Toolkit Usage and Installation (PXC Part 3)

Percona Toolkit is a collection of advanced command-line (CLI) tools that are created/used by Percona to help Percona support team or users to perform a variety of MongoDB and MySQL administration tasks that can be too difficult or complex to do manually.

Part 1 of the article - Percona XtraDB Cluster (PXC) 8.0 Installation/Configuration

Part 2 of the article - ProxySQL 2 configuration/installation

Part 4 of the article - Percona Xtrabackup Backup & Recovery

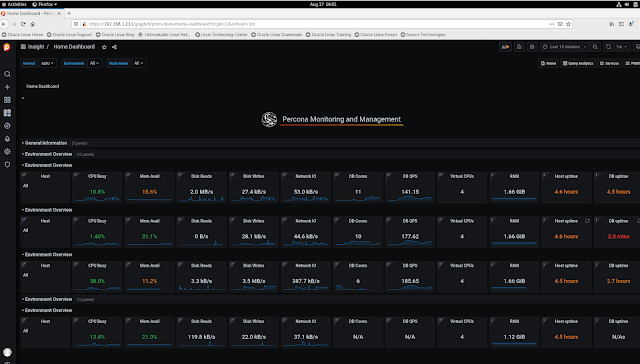

Part 5 of the article - Installation of Percona Monitoring and Management client/server

Installing Percona Toolkit (3.3)

Install Percona Toolkit using the corresponding package manager, on RHEL or CentOS:

yum list all | grep toolkit

dnf install percona-toolkit -y

If you want to download a specific tool, use http://www.percona.com/get

For example, to download the pt-slave-restart tool, run:

wget percona.com/get/pt-slave-restart

Using tools in Percona Toolkit

pt-summary # Summarize system information nicely

pt-summary

pt-summary > pt-summary.out

pt-mysql-summary # Summarize MySQL information nicely

pt-mysql-summary --user=root --password=secret

pt-mysql-summary --user=root --password=secret > "${PTDEST}/pt-mysql-summary.out"

pt-table-checksum # Checks whether master/replicas have consistent copy or not

pt-table-checksum -uroot --pSecret9

pt-table-checksum -uroot --pSecret9 h=localhost -d thirumani

pt-table-checksum -uroot --pSecret9 h=localhost -d thirumani --slave-user=replication --slave-password=Slave@123

pt-table-checksum -uroot --pSecret9 h=localhost -d thirumani --slave-user=replication --slave-password=Slave@123 --no-check-binlog-format

pt-table-checksum -uroot --pSecret9 --replicate testdb.sample h=master-host

pt-table-checksum -uroot --pSecret9 --replicate testdb.sample h=master-host -d sakila

pt-table-sync # Synchronize MySQL table data efficiently

pt-table-sync --execute h=localhost,D=thirumani,t=order_items_refund h=host2

pt-table-sync --execute h=localhost -uroot --pSecret9 --replicate thirumani.order_items_refund --slave-user=replication --slave-password=Slave@123

pt-table-sync --execute localhost host2 host3

pt-table-sync --execute --sync-to-master slave1

pt-table-sync --execute --replicate test.checksum master1

pt-table-sync --execute --replicate test.checksum --sync-to-master slave1

pt-table-sync --execute --sync-to-master h=master2,D=db,t=tbl

pt-table-sync -uroot --pSecret9 --replicate testdb.sample --print h=master-host

pt-slave-find # Find and print replication hierarchy tree of MySQL slaves

pt-slave-find --host localhost -uroot --pSecret9

pt-slave-delay # Make a MySQL slave server lag behind its master

pt-slave-delay --delay 1m --interval 15s --run-time 10m slavehost

pt-slave-delay --delay 2h h=slave

pt-slave-restart # Watch and restart MySQL replication after errors

pt-slave-restart [OPTIONS] [DSN]

pt-slave-restart

pt-slave-restart h=slave

pt-slave-restart --master-uuid

pt-heartbeat # Monitor MySQL replication delay

pt-heartbeat -h192.168.1.222 -uroot --pSecret9

pt-heartbeat -D percona --create-table --update h=master

pt-heartbeat -D test --update -h master-server --daemonize

pt-heartbeat -D test --monitor -h slave-server

pt-heartbeat -D test --check h=slave-server

pt-query-digest # Analyze MySQL queries from logs, processlist, and tcpdump

pt-query-digest master/data/slow.log

pt-query-digest --review h=host2 --no-report slow.log

pt-query-digest slow.log --no-report --output slowlog --filter '$event->{fingerprint} && make_checksum($event->{fingerprint}) eq "FDEA8D2993C9CAF3"'

pt-query-digest --create-review_table --review D=dbname,t=tablename slow.log

pt-query-digest --processlist h=localhost -uroot --pSecret9

pt-query-digest --processlist h=localhost -uroot --pSecret9 --print --no-report --interval=0.01 > slow.log

pt-query-digest --processlist h=localhost -uroot --pSecret9 --interval=0.01 --output=slowlog > slow.log

tcpdump -s 65535 -x -nn -q -tttt -i any -c 1000 port 3306 > mysql.tcp.txt

pt-query-digest --type tcpdump mysql.tcp.txt

pt-upgrade # Verify that query results are identical on different servers (before upgrade)

pt-upgrade h=host1 h=host2 slow.log

pt-upgrade h=host1 --save-results host1_results/ slow.log

pt-upgrade host1_results1/ h=host2

pt-upgrade h=host1 h=host2 queries.txt

pt-visual-explain # Format EXPLAIN output as a tree

pt-visual-explain <file_containing_explain_output>

pt-visual-explain -c <file_containing_query>

mysql -e "explain select * from mysql.user" | pt-visual-explain

pt-stalk # Collect forensic data about MySQL when problems occur

pt-stalk --function process list --variable Command --match Sleep --threshold 155 --cycles 0

pt-stalk --no-stalk --iterations=2 --sleep=30 -- --user=root --password=<mysql-root-pass>

pt-stalk --no-stalk --iterations=2 --sleep=30 --dest="${PTDEST}" -- --user=root --password=<mysql-root-pass>

pt-stalk --variable Threads_connected --cycles 2 -- --user satya

pt-find # Find MySQL tables and execute actions, like GNU find

pt-find --ctime +1 --engine MyISAM

pt-find --engine InnoDB --exec "ALTER TABLE %D.%N ENGINE=MyISAM"

pt-find --connection-id '\D_\d+_(\d+)$' --server-id '\D_(\d+)_\d+$' --exec-plus "DROP TABLE %s"

pt-find --empty junk test --exec-plus "DROP TABLE %s"

pt-find --tablesize +5G

pt-find --printf "%T\t%D.%N\n" | sort -rn

pt-find --noquote --exec "INSERT INTO sysdata.tblsize(db, tbl, size) VALUES('%D', '%N', %T)"

====================================================

pt-align # Aligns output from other tools to columns

pt-archiver # Archive rows from a MySQL table into another table or a file

pt-archiver --source h=host,D=db,t=child --purge --where 'NOT EXISTS(SELECT * FROM parent WHERE col=child.col)'

pt-archiver --source h=oltp_server,D=test,t=tbl --dest h=olap_server --file '/var/log/archive/%Y-%m-%d-%D.%t' --where "1=1" --limit 1000 --commit-each

pt-archiver --source h=127.0.0.1,D=sakila,t=payment --where 'rental_id > 1000' --limit 100 --commit-each --purge --dry-run

pt-archiver --source h=127.0.0.1,D=sakila,t=payment --where 'rental_id > 1000' --limit 100 --commit-each --purge --progress=100

pt-archiver --source h=127.0.0.1,D=sakila,t=payment --dest h=12.0.0.1,D=sakila_archive,t=payment --where 'rental_id > 1000' --limit 100 --commit-each

pt-config-diff # Diff MySQL configuration files and server variables

pt-config-diff h=host1 h=host2

pt-config-diff /etc/my.cnf h=host1

pt-config-diff /etc/my-small.cnf /etc/my-large.cnf

pt-deadlock-logger # Log MySQL deadlocks

pt-deadlock-logger h=host1

pt-deadlock-logger h=host1 --iterations 1

pt-deadlock-logger h=host1 --dest h=host2,D=percona_schema,t=deadlocks

pt-diskstats # An interactive I/O monitoring tool for GNU/Linux

pt-diskstats [OPTIONS] [FILES]

pt-diskstats

pt-duplicate-key-checker # Find duplicate indexes and foreign keys on MySQL tables

pt-duplicate-key-checker --host host1

pt-fifo-split # Split files and pipe lines to a fifo without really splitting

pt-fifo-split --lines 1000000 hugefile.txt

while [ -e /tmp/pt-fifo-split ]; do cat /tmp/pt-fifo-split; done

pt-fingerprint # Convert queries into fingerprints

pt-fingerprint --query "select a, b, c from users where id = 500"

pt-fingerprint /path/to/file.txt

pt-fk-error-logger # Log MySQL foreign key errors

pt-fk-error-logger h=host1

pt-fk-error-logger h=host1 --iterations 1

pt-fk-error-logger h=host1 --dest h=host2,D=percona_schema,t=fke

pt-index-usage # Read queries from a log and analyze how they use indexes

pt-index-usage /path/to/slow.log --host localhost

pt-index-usage slow.log --no-report --save-results-database percona

pt-ioprofile # Watch process IO and print a table of file and I/O activity

pt-ioprofile [OPTIONS] [FILE]

pt-ioprofile

pt-kill # Kill MySQL queries that match certain criteria

pt-kill --busy-time 60 --kill

pt-kill --busy-time 60 --print

pt-kill --match-command Sleep --kill --victims all --interval 10

pt-kill --match-state login --print --victims all

mysql -e "SHOW PROCESSLIST" > proclist.txt

pt-kill --test-matching proclist.txt --busy-time 60 --print

pt-mext # Look at many samples of MySQL SHOW GLOBAL STATUS side-by-side

pt-mext -r -- mysqladmin ext -i10 -c3

pt-mext -r -- cat mysqladmin-output.txt

pt-mext -r -- mysqladmin ext -i1 -c4

pt-mext -r -- cat 2021_05_01_12_55_30-mysqladmin > ptmext.out

pt-online-schema-change # ALTER tables without locking them

pt-online-schema-change --alter "ADD COLUMN c1 INT" D=sakila,t=actor

pt-online-schema-change --alter "ENGINE=InnoDB" D=sakila,t=actor

pt-online-schema-change --alter-foreign-keys-method auto --alter "add key actor_last_update(last_update)" --execute h=localhost,D=sakila,t=actor

pt-pmp # Aggregate GDB stack traces for a selected program, a poor man’s profiler

pt-pmp [OPTIONS] [FILES]

pt-secure-collect # collect, sanitize, pack and encrypt data

pt-secure-collect [<flags>] <command> [<args> ...]

pt-secure-collect collect <flags>

pt-show-grants #Canonicalize and print MySQL grants so you can effectively replicate, compare and version-control them

pt-show-grants

pt-show-grants --separate --revoke | diff othergrants.sql -

pt-sift # Browses files created by pt-stalk

pt-sift FILE|PREFIX|DIRECTORY

pt-table-usage # Analyze how queries use tables

pt-table-usage [OPTIONS] [FILES]

pt-variable-advisor # Analyze MySQL variables and advise on possible problems

pt-variable-advisor localhost

pt-variable-advisor --source-of-variables vars.txt

pt-mongodb-query-digest # reports query usage statistics by aggregating queries from MongoDB query profiler

pt-mongodb-query-digest [OPTIONS]

pt-mongodb-summary # collects information about a MongoDB cluster. It collects information from several sources to provide an overview of the cluster

pt-mongodb-summary [OPTIONS] [HOST[:PORT]]

pt-pg-summary # collects information about a PostgreSQL cluster

pt-pg-summary [options] [host:[port]]

pt-k8s-debug-collector # Collects debug data (logs, resource statuses etc.) from a k8s/OpenShift cluster. Data is packed into the cluster-dump.tar.gz archive in the current working directory

pt-k8s-debug-collector

Related Percona/MySQL Articles: Percona XtraDB Cluster (PXC) Configuration Percona Xtrabackup Full/Incremental Backup & Recovery